The Turing Test, proposed by computing pioneer Alan Turing in his 1950 paper “Computing Machinery and Intelligence,” has long been the gold standard for evaluating a machine’s ability to exhibit intelligent behavior indistinguishable from that of a human. But as we stand at the threshold of a new era in artificial intelligence, it’s time to ask: Has AI truly surpassed this benchmark, or have our expectations outgrown it?

With the advent of advanced models like GPT-4, the line between human and machine interaction has become increasingly blurred. These models can engage in conversations so convincingly human-like that the distinction can be challenging to make. However, the question remains: Does passing this test equate to genuine understanding, or are we merely observing sophisticated mimicry?

The Nuances of AI and the Turing Test

AI systems today, especially large language models like GPT-4, can produce text that is often indistinguishable from human writing. But does this mean they have passed the Turing Test? The answer is more complex than a simple yes or no. While these models can often convince a person that they are talking to another human, telltale signs of their artificial nature remain. These signs typically emerge in areas requiring deep contextual understanding, coherence over long conversations, and genuine empathy: qualities that, for now, remain elusive for machines.

The Role of Inference in AI

Inference in AI refers to the stage at which a trained model is applied to new input to produce an output, as distinct from training, when the model’s parameters are learned from data. In a model like GPT-4, the work with vast amounts of data happens during training; at inference time, the model uses its learned parameters to generate a response one token at a time, each token conditioned on the prompt and on everything generated so far. The leap from GPT-3 to GPT-4, for instance, shows marked improvement in handling ambiguous queries and giving more nuanced responses. OpenAI has not disclosed GPT-4’s architecture, training data, or compute in detail, but gains like these are generally attributed to a combination of greater scale, refined training methods (including fine-tuning with human feedback), and larger, more diverse datasets.
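To make the idea concrete, here is a toy sketch of inference for a next-token language model. The “trained parameters” are a hypothetical hard-coded table (`NEXT_TOKEN_PROBS`) in which each next token depends only on the previous one; a real model like GPT-4 conditions on the whole preceding context using a neural network, and often samples from the distribution rather than always taking the most likely token. What the sketch does show is the shape of inference: the parameters are only read, never updated, and text is produced one token at a time.

```python
# Hypothetical "trained parameters": for each token, a probability
# distribution over the next token. In a real LLM these come from a
# neural network with billions of learned weights; here they are hard-coded.
NEXT_TOKEN_PROBS: dict[str, dict[str, float]] = {
    "<start>": {"the": 0.6, "a": 0.4},
    "the": {"machine": 0.5, "test": 0.3, "<end>": 0.2},
    "a": {"machine": 0.7, "<end>": 0.3},
    "machine": {"answers": 0.6, "<end>": 0.4},
    "test": {"<end>": 1.0},
    "answers": {"<end>": 1.0},
}


def greedy_generate(max_tokens: int = 10) -> list[str]:
    """Run inference: repeatedly pick the most likely next token.

    The parameter table is only read, never modified; any learning
    would have happened earlier, during training.
    """
    tokens = ["<start>"]
    for _ in range(max_tokens):
        dist = NEXT_TOKEN_PROBS[tokens[-1]]
        next_token = max(dist, key=dist.get)  # greedy choice
        if next_token == "<end>":
            break
        tokens.append(next_token)
    return tokens[1:]  # drop the start marker


print(" ".join(greedy_generate()))  # prints "the machine answers"
```

Greedy selection keeps the example deterministic; swapping `max` for random sampling weighted by `dist` would give the more varied output typical of chat systems.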

Distinguishing GPT, ChatGPT, and the Transformer Architecture

Understanding the distinctions between GPT, ChatGPT, and the Transformer is key to appreciating the nuances of AI technology today. The Transformer architecture, introduced by Vaswani et al. in the 2017 paper “Attention Is All You Need,” is the foundation upon which models like GPT are built. Its self-attention mechanism lets each position in a sequence weigh every other position directly, which makes it particularly well suited to tasks like language modeling and allows training to be parallelized across the sequence.
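The core operation of the Transformer is scaled dot-product attention, defined in Vaswani et al. as softmax(QKᵀ / √d_k)·V, where each query vector is compared against every key vector and the resulting weights mix the value vectors. The sketch below is a deliberately minimal, single-head version in plain Python, assuming small list-of-lists matrices; it omits the learned projection matrices, multiple heads, and causal masking that real implementations add.

```python
import math


def softmax(xs: list[float]) -> list[float]:
    """Numerically stable softmax over one row of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(
    Q: list[list[float]], K: list[list[float]], V: list[list[float]]
) -> list[list[float]]:
    """Compute softmax(Q K^T / sqrt(d_k)) V, one query row at a time."""
    d_k = len(K[0])
    out = []
    for q in Q:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in K]
        weights = softmax(scores)  # non-negative, sums to 1
        # Output row: attention-weighted average of the value vectors.
        out.append([sum(w * v[j] for w, v in zip(weights, V)) for j in range(len(V[0]))])
    return out


# Two identical keys get equal weight, so the output averages the values.
print(attention([[1.0, 0.0]], [[1.0, 0.0], [1.0, 0.0]], [[2.0, 0.0], [0.0, 2.0]]))
# prints [[1.0, 1.0]]
```

Because every query attends to every key in one step, no information has to be passed position by position as in older recurrent networks, which is what makes the architecture easy to parallelize.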

GPT, or Generative Pre-trained Transformer, is a family of models built on the Transformer architecture (specifically, a decoder-only variant of it). It generates text by predicting the next token, a word or word fragment, based on the tokens that came before. ChatGPT, on the other hand, is a GPT model fine-tuned specifically for conversational use. This fine-tuning combined supervised training on example dialogues with reinforcement learning from human feedback (RLHF), allowing ChatGPT to better follow instructions and handle the intricacies of human conversation.
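Predicting the next token from the ones before it is also what GPT-style models are trained to do: at every position in a training text, the model is scored on how much probability it assigned to the token that actually came next, typically via cross-entropy (average negative log-likelihood). The sketch below illustrates that objective under simplifying assumptions: whole words stand in for tokens, and `predict` is a hypothetical stand-in for the model that maps a context to a probability distribution over next tokens.

```python
import math
from collections.abc import Callable


def next_token_pairs(tokens: list[str]) -> list[tuple[list[str], str]]:
    """Split a sequence into (context, target) pairs, where each target
    is the token that follows its context."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]


def average_nll(
    tokens: list[str], predict: Callable[[list[str]], dict[str, float]]
) -> float:
    """Mean negative log-likelihood of each next token given its context."""
    pairs = next_token_pairs(tokens)
    if not pairs:
        raise ValueError("need at least two tokens to form a prediction target")
    total = 0.0
    for context, target in pairs:
        # Tiny floor avoids log(0) when the model gives the target no probability.
        p = predict(context).get(target, 1e-12)
        total += -math.log(p)
    return total / len(pairs)


# Hypothetical baseline model that ignores context: every word equally likely.
VOCAB = ["the", "machine", "answers", "questions"]


def uniform_model(context: list[str]) -> dict[str, float]:
    return {tok: 1 / len(VOCAB) for tok in VOCAB}


loss = average_nll(["the", "machine", "answers", "questions"], uniform_model)
print(round(loss, 4))  # prints 1.3863, i.e. log(4)
```

A model that actually learns from its context would drive this loss below the uniform baseline of log(4); pretraining amounts to adjusting the model’s parameters to push this number down across an enormous corpus.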

The Competitive Landscape: ChatGPT and Its Rivals

In the rapidly evolving AI landscape, ChatGPT is not without its competitors. Google DeepMind has introduced Gemini, a family of multimodal models that Google promotes for their reasoning capabilities. Anthropic’s Claude reflects the company’s emphasis on safety and interpretability, with the goal of building AI systems that are both powerful and understandable. Grok, a chatbot from Elon Musk’s xAI, is making strides in conversational AI, while Meta’s Llama, a family of openly released models, focuses on large-scale language modeling with an eye toward accessibility and efficiency. Groq (not to be confused with Grok), meanwhile, is a hardware company rather than a model maker: it builds AI accelerator chips, which it calls Language Processing Units, designed for fast inference.

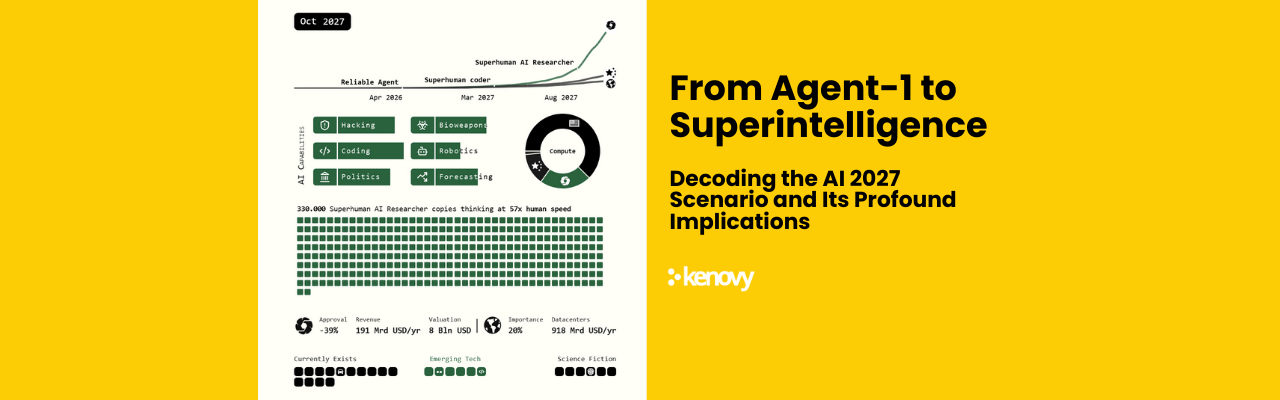

Each of these projects brings something unique to the table, pushing the boundaries of what AI can achieve. As these technologies continue to evolve, competition among them is likely to drive further innovation, making the next few years an exciting time for AI development.

The Future of AI: Beyond the Turing Test

While the Turing Test remains a valuable historical benchmark, it is no longer the definitive measure of AI’s capabilities. Today’s AI systems are evaluated not just on their ability to mimic human behavior, but on their ability to reason, understand, and even innovate. As we continue to push the frontiers of AI, understanding how inference works, what has improved in models like GPT-4, and how individual models differ from the architectures they are built on will be crucial for anyone looking to leverage these technologies in the digital age.