Introduction

Artificial intelligence (AI) has undergone profound transformations since the early development of rule-based systems. Understanding how the field evolved into today’s autonomous AI agents helps decision-makers recognize both the vast opportunities and the substantial responsibilities ahead. The following overview traces AI’s trajectory from the symbolic logic systems of the 1950s to the prospect of artificial superintelligence.

Symbolic AI and Expert Systems

Early AI research focused on symbolic reasoning: rule-based programs that manipulated symbols to perform tasks such as theorem proving, game playing, and logic problem-solving. Symbolic AI, the dominant paradigm from the mid-1950s until the mid-1990s, fostered optimism that human-level machine intelligence was within reach. Expert systems, notably Digital Equipment Corporation’s XCON, encoded specialist organizational knowledge as structured decision rules and fueled the commercial AI boom of the 1980s. However, these systems proved brittle outside narrowly defined domains and costly to maintain as their rule bases grew, and the collapse of the expert-systems market contributed to the AI winter of the late 1980s and early 1990s. Nevertheless, symbolic AI established foundational concepts such as search algorithms, knowledge representation, and multi-agent systems, laying critical groundwork for later advances.
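To make “structured decision rules” concrete, here is a minimal sketch of forward chaining, the inference style used by many production-rule expert systems: rules fire whenever all their conditions are known facts, adding new facts until nothing changes. The rule set, the fact names, and the `forward_chain` helper are hypothetical illustrations, not XCON’s actual rules or implementation.

```python
# A minimal forward-chaining rule engine. Each rule is a pair of
# (conditions, conclusion); it fires when every condition is a known fact.
Rule = tuple[frozenset[str], str]


def forward_chain(facts: set[str], rules: list[Rule]) -> set[str]:
    """Apply rules repeatedly until no rule adds a new fact (a fixed point)."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


# Hypothetical hardware-configuration rules, loosely in the spirit of XCON.
RULES: list[Rule] = [
    (frozenset({"order:cpu", "order:disk"}), "needs:disk_controller"),
    (frozenset({"needs:disk_controller"}), "needs:backplane_slot"),
    (frozenset({"needs:backplane_slot", "cabinet:full"}), "needs:expansion_cabinet"),
]

if __name__ == "__main__":
    order = {"order:cpu", "order:disk", "cabinet:full"}
    derived = forward_chain(order, RULES)
    print(sorted(derived - order))
```

Given an order with a CPU and a disk in a full cabinet, the rules chain together to derive a disk controller, a backplane slot, and an expansion cabinet. A fact the rule authors never anticipated simply triggers nothing, which illustrates the brittleness of such systems outside their defined domain.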

Machine Learning

In 1959, Arthur Samuel of IBM introduced the term “machine learning,” demonstrating how a program could learn to play checkers better through repeated experience. Tom Mitchell’s widely cited definition makes the idea precise: a program learns from experience E with respect to a class of tasks T and a performance measure P if its performance at T, as measured by P, improves with E. In recent decades, this approach has become central to AI development. By building statistical models from data rather than relying on explicitly programmed rules, machine learning has enabled robust applications in classification, prediction, and optimization across many fields.
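The definition above, in which performance on a task T improves with experience E, can be illustrated with a toy learner. In this minimal sketch every name and number is an assumption chosen for illustration: the task is predicting y from x for a hypothetical target y = 3x + 2, the experience is a stream of labeled examples, and the performance measure is mean squared error on held-out inputs. Each example nudges the model by one stochastic-gradient step.

```python
import random


def true_function(x: float) -> float:
    # Hypothetical target relationship the learner must discover.
    return 3.0 * x + 2.0


def mse(w: float, b: float, xs: list[float]) -> float:
    """Performance measure: mean squared error of y = w*x + b on held-out inputs."""
    return sum((w * x + b - true_function(x)) ** 2 for x in xs) / len(xs)


def learn(num_examples: int, lr: float = 0.1, seed: int = 0) -> tuple[float, float]:
    """Task: predict y from x. Experience: a stream of labeled examples.

    Each example triggers one stochastic-gradient step on its squared error,
    so more experience means more corrections to the parameters.
    """
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    for _ in range(num_examples):
        x = rng.uniform(0.0, 1.0)
        error = (w * x + b) - true_function(x)
        w -= lr * error * x
        b -= lr * error
    return w, b


if __name__ == "__main__":
    test_xs = [i / 10 for i in range(11)]
    for n in (0, 10, 100, 1000):
        w, b = learn(n)
        print(f"{n:>5} examples -> test MSE {mse(w, b, test_xs):.4f}")
```

Running the script should show test error falling as the number of training examples grows; because the toy data are noise-free, enough examples drive it close to zero. Real systems learn far richer functions from noisy data, but the relationship between experience and measured performance is the same.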

Deep Learning

The most notable recent advances in AI have come from deep learning, a subset of machine learning that uses artificial neural networks with many layers. Although neural networks have existed for decades, major performance breakthroughs arrived only once large datasets and powerful graphics processing units (GPUs) became available. A pivotal moment came in 2012, when AlexNet, a convolutional neural network trained on GPUs, won the ImageNet competition by a wide margin over traditional image-recognition techniques. Subsequent achievements in speech recognition and generative modeling solidified deep learning’s prominence, and the 2018 Turing Award went to three of its pioneers: Geoffrey Hinton, Yoshua Bengio, and Yann LeCun. Today, deep learning underpins core AI capabilities, including image processing, speech synthesis, and generative applications.

Large Language Models (LLMs)

Although statistical language modeling dates back more than a century, large language models (LLMs) expanded rapidly after the introduction of the Transformer architecture in 2017. LLMs are deep neural networks trained on massive text corpora, and they excel at generating and summarizing content, translating languages, assisting with programming, and many other complex tasks. Model sizes have grown dramatically, from ELMo’s 94 million parameters to GPT-3’s 175 billion (a nearly 1,900-fold increase) and GPT-4’s reported 1.7 trillion, a figure OpenAI has not officially confirmed. Such scale requires specialized infrastructure and careful training practices, and it raises important concerns about energy consumption, bias, and factual inaccuracies. Nevertheless, LLMs remain integral to contemporary generative AI and represent a critical step toward increasingly autonomous systems.

AI Agents: Today’s Frontier

Today, the frontier of AI research and application lies in autonomous agents that can not only generate information but also take actions. Enterprises are moving from basic knowledge-based systems, such as chatbots, toward generative-AI agents that can plan and manage multi-step workflows across complex digital environments. Built on foundation models, these agents can integrate with other software platforms, collaborate with human counterparts, adapt to unforeseen circumstances, and improve their performance over time. Initiatives from Google, Microsoft, Adept, and others illustrate this shift from content creation to active engagement and problem-solving, which many observers regard as an important step toward artificial general intelligence.
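The plan, act, and observe cycle behind such agents can be sketched in a few lines. This is a minimal, hypothetical illustration: the tools (`check_stock`, `place_order`, `notify_human`) and the inventory are invented, and a simple rule-based `choose_action` method stands in for the foundation model a real agent would query to decide its next step.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


# Hypothetical tools; a real deployment would wrap enterprise APIs instead.
def check_stock(item: str) -> str:
    stock = {"laptop": 0, "monitor": 5}
    return f"{item}:{stock.get(item, 0)}"


def place_order(item: str) -> str:
    return f"ordered {item}"


def notify_human(message: str) -> str:
    return f"escalated: {message}"


TOOLS: dict[str, Callable[[str], str]] = {
    "check_stock": check_stock,
    "place_order": place_order,
    "notify_human": notify_human,
}


@dataclass
class Agent:
    goal: str  # the item the agent is asked to supply
    history: list[tuple[str, str, str]] = field(default_factory=list)

    def choose_action(self) -> Optional[tuple[str, str]]:
        """Stand-in for a foundation-model planner: pick the next tool call
        from the goal and the observations gathered so far."""
        if not self.history:
            return ("check_stock", self.goal)
        tool, _, observation = self.history[-1]
        if tool == "check_stock":
            count = int(observation.split(":")[1])
            if count > 0:
                return ("place_order", self.goal)
            # Unforeseen circumstance: nothing in stock, so hand off to a person.
            return ("notify_human", f"{self.goal} out of stock")
        return None  # order placed or escalated: the workflow is finished

    def run(self, max_steps: int = 5) -> list[tuple[str, str, str]]:
        """Plan-act-observe loop, with a step limit as a simple safeguard."""
        for _ in range(max_steps):
            action = self.choose_action()
            if action is None:
                break
            tool, arg = action
            observation = TOOLS[tool](arg)
            self.history.append((tool, arg, observation))
        return self.history


if __name__ == "__main__":
    for item in ("monitor", "laptop"):
        print(item, Agent(item).run())
```

For example, `Agent("monitor").run()` checks stock and then places an order, while `Agent("laptop").run()` finds nothing in stock and escalates to a person instead. The `max_steps` limit is a small instance of the guardrails autonomous systems need so that a faulty plan cannot loop indefinitely.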

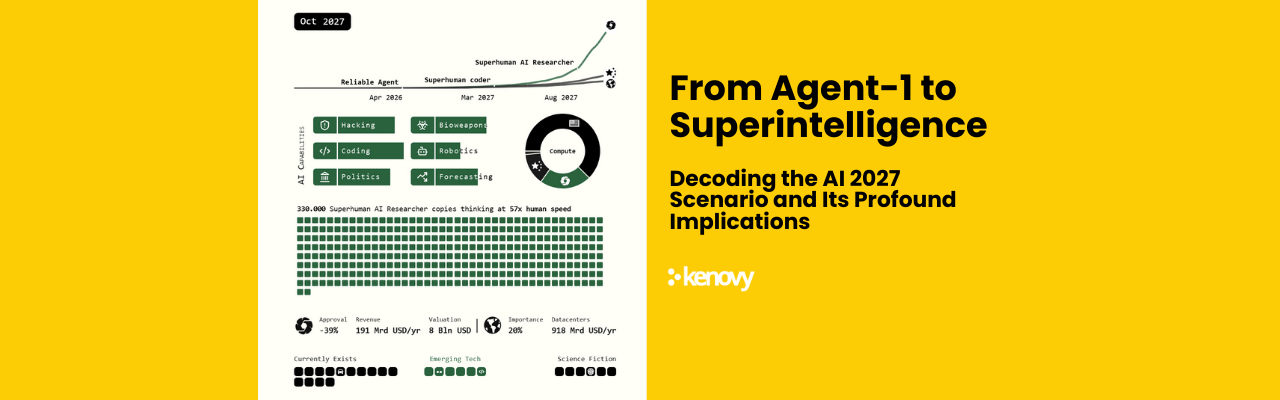

Artificial General Intelligence (AGI)

Beyond today’s AI agents lies the ambitious goal of artificial general intelligence (AGI): machines able to perform any intellectual task a human can. Unlike specialized (narrow) AI, AGI would require broad learning, reasoning, and the flexible application of knowledge across diverse tasks and environments. Commonly cited enablers of AGI include advanced neural architectures, more sophisticated learning methods, deeper natural-language understanding, and possibly quantum or neuromorphic computing. While timelines for achieving AGI remain speculative, its realization could bring transformative societal benefits along with considerable ethical and existential implications.

Artificial Superintelligence (ASI)

Beyond AGI, artificial superintelligence (ASI) describes a hypothetical future in which machine intelligence surpasses human cognitive abilities in every domain. A system capable of improving itself could trigger a rapid, self-reinforcing acceleration in capability, a scenario the mathematician I. J. Good called an “intelligence explosion.” Good argued in 1965 that the first ultraintelligent machine might be the last invention humanity would ever need to make, and futurist Ray Kurzweil predicts a technological singularity around 2045. Predictions vary widely, but the prospect of ASI combines profound potential benefits with existential risks, underscoring the need for stringent safety standards, transparency measures, and ethical governance as AI advances.

Conclusion

Tracing AI’s journey from symbolic expert systems through machine learning, deep learning, and LLMs to today’s autonomous agents reveals a trajectory of expanding technological capabilities and escalating ethical considerations. Enterprises now deploy AI agents that can interpret complex instructions and orchestrate sophisticated workflows, opening significant opportunities for productivity and innovation. Such power, however, carries considerable ethical responsibility. Leaders must actively pursue responsible AI strategies, balancing technology adoption with rigorous ethical frameworks and anticipating the profound societal shifts that AGI and ASI could bring. Understanding AI’s evolutionary arc is therefore essential to responsibly navigating toward a future of harmonious coexistence between humanity and intelligent machines.