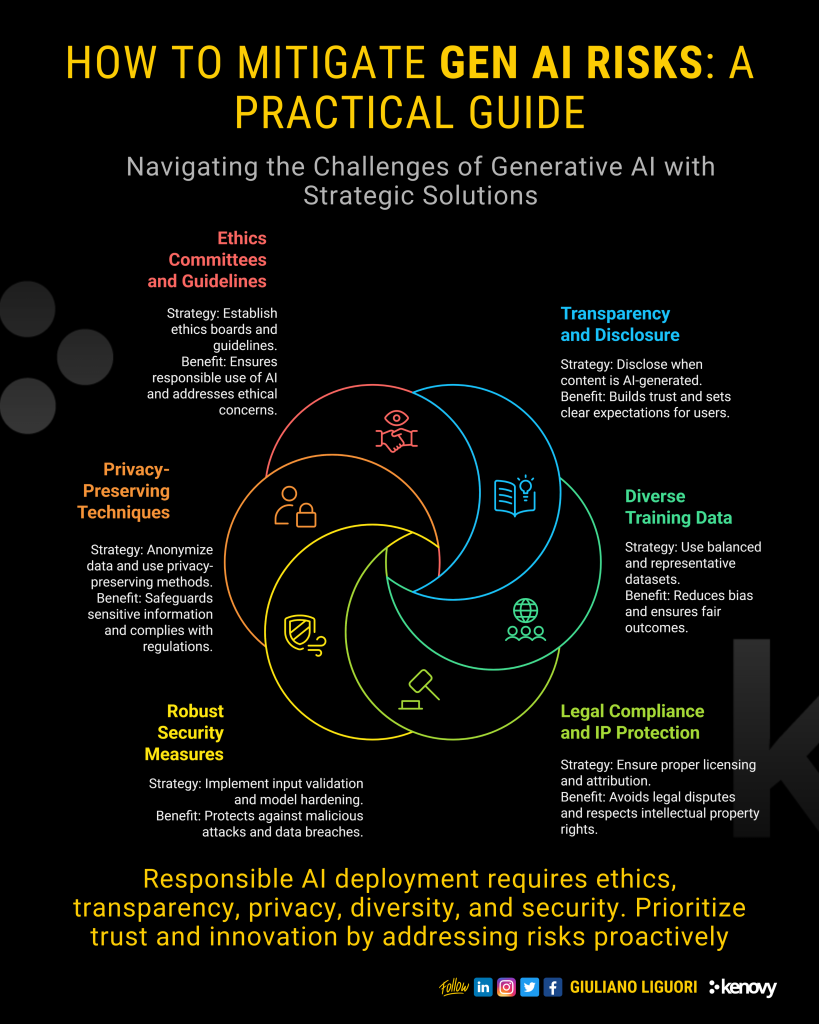

Generative Artificial Intelligence (Gen AI) is no longer a futuristic concept—it’s here, transforming industries, redefining creativity, and reshaping how we work. From generating realistic images and writing compelling content to automating complex tasks, Gen AI has proven its potential to drive innovation at scale. However, as with any powerful technology, it comes with its own set of risks that demand careful consideration and proactive management.

As professionals navigating this rapidly evolving landscape, it’s our responsibility to ensure that Gen AI serves as a force for good. In this article, I’ll explore the key risks associated with Gen AI and outline actionable strategies to mitigate them, empowering us to harness its capabilities responsibly.

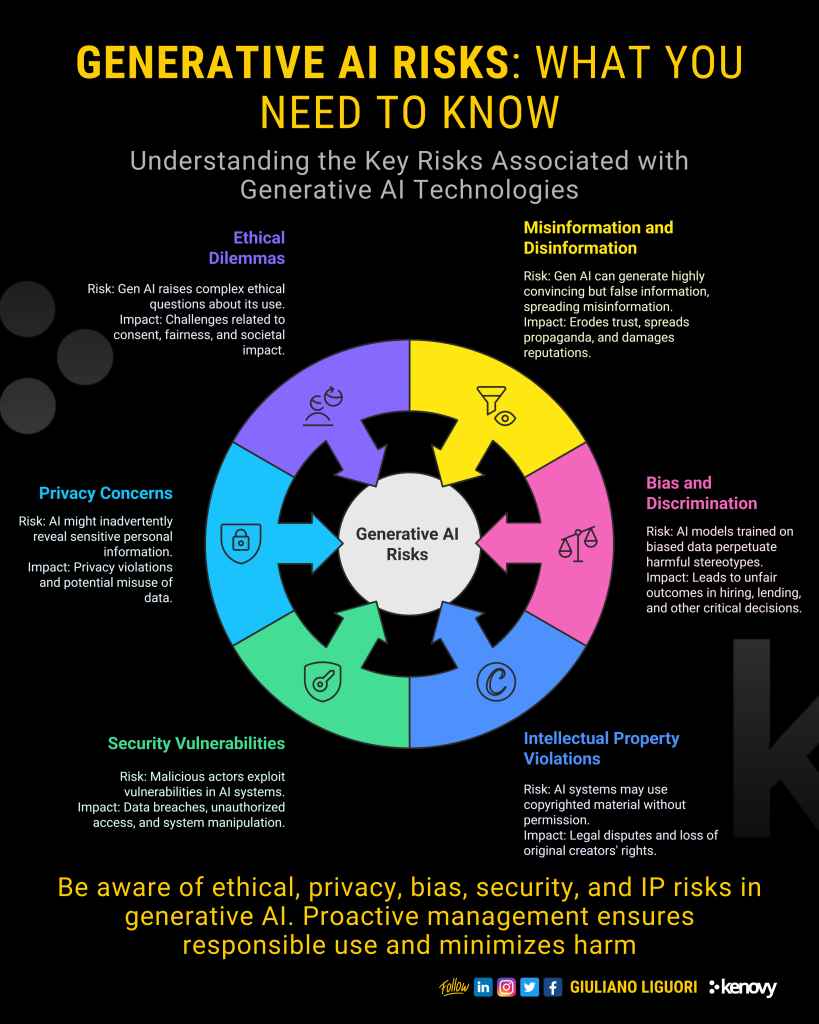

1. Misinformation and Disinformation: The Trust Challenge

One of the most pressing concerns with Gen AI is its ability to produce highly convincing yet false information. Deepfakes, fabricated news articles, and manipulated media can erode trust, amplify polarization, and even destabilize societies.

How to Mitigate:

- Transparency First: Always disclose when content is AI-generated. This builds trust and sets clear expectations for audiences.

- Verification Tools: Leverage watermarking technologies or blockchain-based systems to authenticate content.

- Fact-Checking Partnerships: Collaborate with fact-checking organizations to validate outputs before they reach the public.

By prioritizing transparency and accountability, we can combat misinformation while preserving the credibility of AI-generated content.

2. Bias and Discrimination: Ensuring Fairness in AI

AI models are only as unbiased as the data they’re trained on. If the training data reflects societal prejudices, the AI will perpetuate—or worse, amplify—those biases, leading to unfair outcomes in hiring, lending, healthcare, and beyond.

How to Mitigate:

- Diverse Data Sets: Ensure training data represents diverse demographics and perspectives.

- Bias Audits: Regularly test AI outputs for bias using fairness metrics and third-party evaluations.

- Human Oversight: Combine AI insights with human judgment to catch and correct biased decisions.

Fairness isn’t just an ethical imperative—it’s also good business. Companies that prioritize equity build stronger relationships with their customers and employees.

3. Intellectual Property Concerns: Respecting Creativity

The rise of Gen AI has sparked debates about intellectual property rights. When AI generates artwork, music, or text inspired by copyrighted material, who owns the output? What happens if proprietary data is inadvertently included in training datasets?

How to Mitigate:

- Data Licensing Agreements: Use properly licensed datasets to avoid copyright violations.

- Attribution Systems: Credit original creators when their work influences AI outputs.

- Legal Compliance Reviews: Stay informed about evolving IP laws and ensure your practices align with them.

Respecting IP rights not only protects creators but also fosters a culture of innovation built on mutual respect.

4. Security Vulnerabilities: Safeguarding Against Threats

Like any digital system, Gen AI is vulnerable to cyberattacks. Malicious actors could exploit prompt injection attacks, adversarial inputs, or reverse engineering to manipulate or extract sensitive information from AI systems.

How to Mitigate:

- Input Validation: Implement filters to block harmful or malicious prompts.

- Model Hardening: Train models to resist adversarial attacks through robust testing and simulation.

- Access Controls: Restrict access to AI systems based on user roles and permissions.

A secure AI ecosystem is foundational to maintaining trust and protecting valuable assets.

5. Privacy Concerns: Protecting Sensitive Information

Gen AI systems often process vast amounts of data, raising concerns about privacy breaches. If improperly handled, these systems could inadvertently reveal personal or confidential information.

How to Mitigate:

- Anonymization Techniques: Remove personally identifiable information (PII) from training datasets.

- Privacy-Preserving Methods: Adopt technologies like differential privacy or federated learning to safeguard individual data.

- Compliance with Regulations: Adhere to global privacy laws such as GDPR and CCPA to ensure robust data protection.

Prioritizing privacy isn’t just about compliance—it’s about earning and retaining user trust.

6. Ethical Dilemmas: Balancing Innovation with Responsibility

Gen AI raises profound ethical questions. Should we simulate deceased individuals? Is it ethical to automate jobs that displace workers? How do we define acceptable use cases for this technology?

How to Mitigate:

- Ethics Committees: Establish internal boards to evaluate the societal impact of AI applications.

- Clear Guidelines: Develop and enforce ethical frameworks for deploying Gen AI.

- Stakeholder Engagement: Involve diverse voices—including ethicists, community leaders, and end-users—in decision-making processes.

Ethical leadership ensures that technological progress aligns with human values.

7. Environmental Impact: Building a Sustainable Future

Training large Gen AI models consumes significant computational resources, contributing to carbon emissions and environmental harm. As stewards of innovation, we must address this growing concern.

How to Mitigate:

- Energy-Efficient Models: Optimize algorithms to reduce energy consumption during training and inference.

- Green Computing Practices: Power data centers with renewable energy sources.

- Lifecycle Assessments: Evaluate the environmental footprint of AI projects and prioritize sustainability.

Sustainability isn’t optional—it’s essential for long-term success.

8. Societal Polarization: Bridging Divides

Personalized content generated by AI can reinforce echo chambers, deepening divides within society. While customization enhances user experience, it can also isolate individuals from differing viewpoints.

How to Mitigate:

- Algorithmic Transparency: Make recommendation algorithms transparent and explainable.

- Diverse Content Exposure: Design systems to expose users to a variety of perspectives.

- User Control: Allow users to customize their experience and opt out of overly personalized recommendations.

Promoting inclusivity strengthens communities and fosters meaningful connections.

Moving Forward Together

Generative AI holds immense promise, but realizing its full potential requires vigilance, collaboration, and a commitment to responsible innovation. By addressing the risks outlined above—misinformation, bias, security threats, and more—we can build a future where AI enhances human potential without compromising our values.

As professionals, let’s lead by example. Let’s advocate for transparency, champion fairness, and prioritize sustainability. Together, we can shape a world where Gen AI becomes a tool for empowerment, not exploitation.

What steps is your organization taking to navigate the risks of Gen AI? Share your thoughts in the comments—I’d love to hear your perspective!